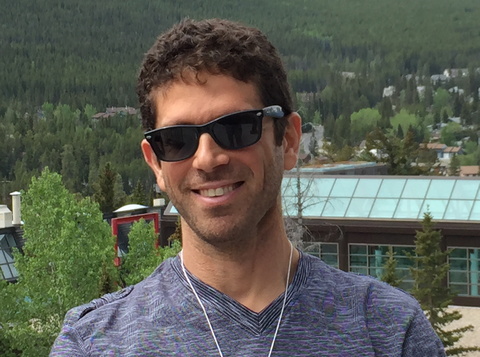

Assaf Zeevi is Professor and holder of the Kravis chair at the Graduate School of Business, Columbia University. His research and teaching interests lie at the intersection of Operations Research, Statistics, and Machine Learning. In particular, he has been developing theory and algorithms for reinforcement learning, Bandit problems, stochastic optimization, statistical learning and stochastic networks. Assaf’s work has found applications in online retail, healthcare analytics, dynamic pricing, recommender systems, and social learning in online marketplaces.

Assaf received his B.Sc. and M.Sc. (Cum Laude) from the Technion, in Israel, and subsequently his Ph.D. from Stanford University. He spent time as a visitor at Stanford University, the Technion and Tel Aviv University. He is the recipient of several teaching and research awards including a CAREER Award from the National Science Foundation, an IBM Faculty Award, Google Research Award, as well as several best paper awards including the 2019 Lanchester Prize. Assaf has recently served a term as Vice Dean at Columbia Business School and Editor-in-Chief of Stochastic Systems (the flagship journal of INFORMS’ Applied Probability Society). He also serves on various other editorial boards and program committees in the Operations Research and Machine Learning communities, as well as scientific advisory boards for startup companies in the high technology sector.

| Name of Speaker | Assaf Zeevi |

| Schedule | 11 March 2022, 10am – 11.30am

(60 min talk + 30 min Q&A) |

| Link to register

(Zoom) |

https://nus-sg.zoom.us/meeting/register/tZYtdOusrj4qGtYQnSrSqKvhRN7RjGgj8vM3 |

| Title | Online Learning in Sequential Selection Problems

(A Two Part Talk…) |

| Abstract | In this sequence of two (self-contained) talks, I will describe some recent work on learning theoretic formulations in sequential selection problems, focusing on two vignettes.

The first (to be covered in part 1) will focus on an optimal stopping problem: given a random sequence of independent observations revealed one at a time over some finite horizon of play, the objective is to design an algorithm that “stops’’ this sequence to maximize the expected value of the “stopped” observation. (Once the sequence is stopped there is no recourse and the game terminates.) When the (common) distribution governing the random sequence is known, the optimal rule is a (distribution-dependent) threshold policy that is obtained by backward induction; work on this problem has a long and storied history. Surprisingly, if one does *not* assume the distribution to be known a priori, there is fairly little work in extant literature, and the talk will develop this formulation, expound some of the challenges involved in its learning theoretic formulations, and an indication of what can (and cannot) be achieved in this setting. The second vignette (to be covered in part 2) will focus on a sequential stochastic assignment problem, which dates back roughly 50 years. In this problem a known number of sequentially arriving items, say, “jobs,” need to be assigned to a pool of, say, “workers,” and once each job is assigned to a worker, both job and worker are no longer admissible for further assignment. Each job is characterized by a quality / complexity indicator drawn independently from an underlying distribution, and each worker is characterized by a known “productivity coefficient” (for example, the effectiveness by which that person can process said job). The objective is to assign jobs to workers so that the expected overall work time required for performing all the jobs will be minimal. This formulation has been used extensively in the OR literature in a variety of application domains, and is increasingly relevant in the study of online marketplaces and matching markets. As in the case of the optimal stopping problem, when the ambient distribution is known a priori the optimal assignment policy is obtained using backward induction arguments. Naturally, in most realistic applications knowledge of this key problem primitive is not available, giving rise, again, to learning theoretic formulations which will be the main focus of this part of the talk. |